Aligning Human Intelligence with AI

An executive introduction to the white paper The Fifth Revolution: Empowering Human Intelligence in the AI Era

1. The boardroom problem no one names

Across sectors, executive teams are making the same move.

They approve investment in a new AI platform, run a series of pilots, sit through slick demos, and 12–18 months later discover… not much has really changed. A handful of enthusiastic teams use the tools. A few processes are faster. But core productivity, decision quality, customer experience and risk profiles look much the same.

Meanwhile, the workforce feels anxious. Some people worry they are being automated out. Others quietly ignore the tools because they do not trust them, or do not understand how AI fits into their day-to-day responsibilities.

It is tempting to conclude that the technology “isn’t ready” or that “our people just aren’t digital enough”.

But the real issue is usually simpler and more hopeful:

The organisation has invested in artificial intelligence (AI) before it has aligned and equipped its human intelligence (HI).

This introduction presents a way to fix that: treating Human Intelligence (HI) as the starting point and anchor of your AI strategy, not an afterthought.

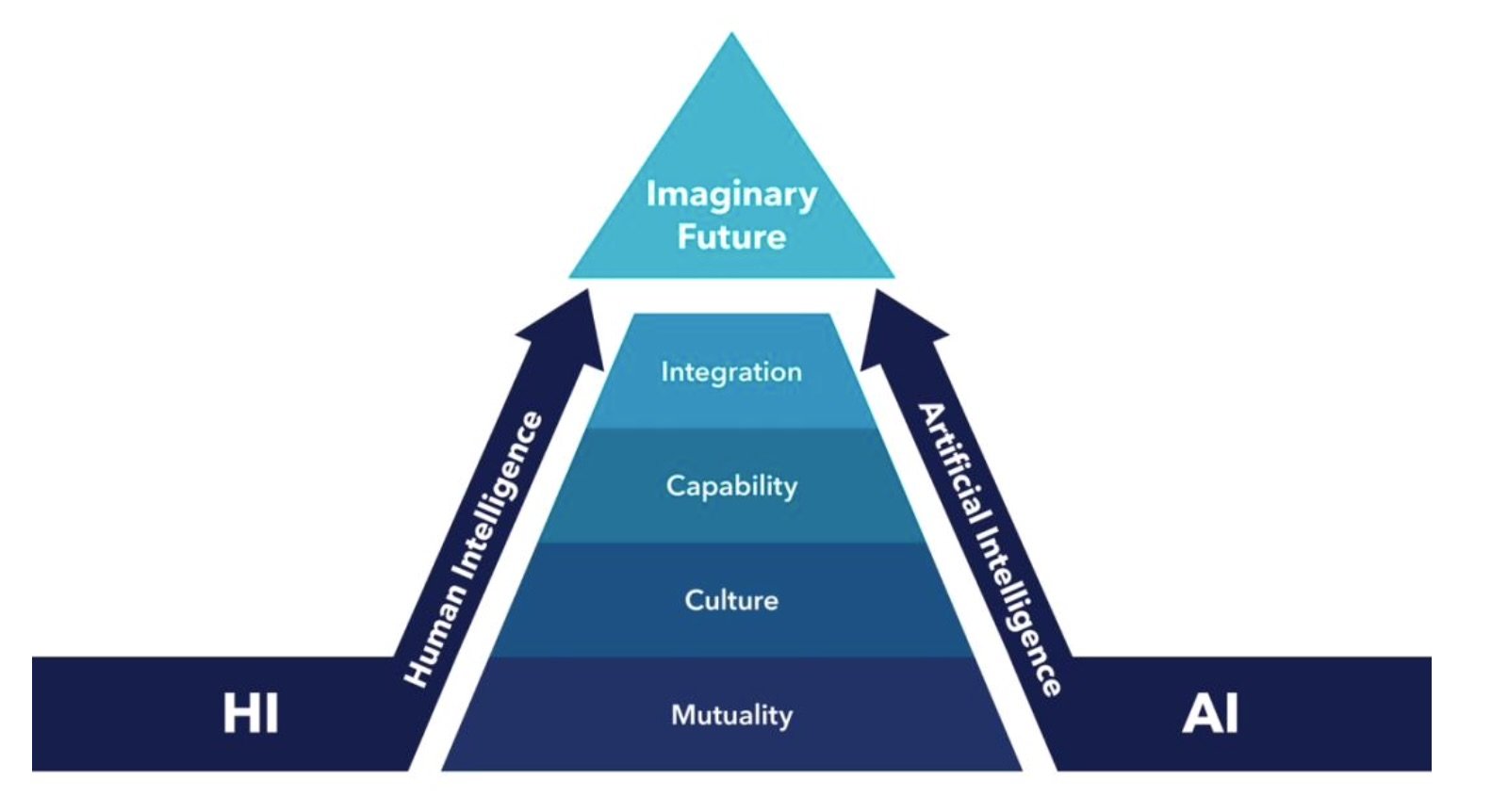

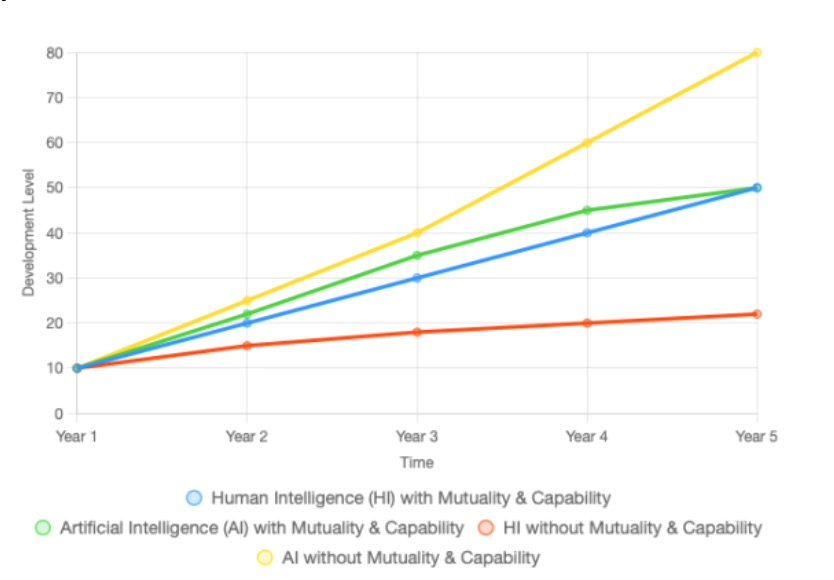

Figure 1 – HI and AI over time

When AI races ahead of HI, organisations over-invest in technology that people cannot fully use, eroding trust and wasting resources. When HI and AI are developed together, on a foundation of trust and capability, value and confidence grow side by side.

To address this challenge, the white paper that this introduction supports sets out a structured way to align human intelligence with AI through four sequential pillars: mutuality, culture, capability and integration. It combines global and Australian perspectives, including linkages to Australia’s AI Ethics Principles, to show how organisations can pursue a human-centred AI future in practice.

2. What we mean by Human Intelligence (HI)

When we talk about HI, we are not contrasting “clever people” with “clever machines”.

Human Intelligence in this context is the collective ability of your organisation to:

• Sense what is changing in your environment

• Make meaning from complex, ambiguous information

• Exercise judgement about risk, ethics and value

• Act together in ways that build trust and performance over time

It is the skills, relationships and culture that allow your people to use technology well, not just tolerate it.

AI can now process vast data sets, automate complex tasks and support decisions in ways that were impossible even a few years ago. But without the right HI, this power quickly becomes:

• Ethically risky – decisions emerge that no one feels they own

• Operationally fragile – systems look impressive but are rarely trusted at the front line

• Strategically underwhelming – “shiny tools, shallow impact”

Organisations that deliberately align HI and AI, by contrast, are already showing that you can gain both productivity and trust: faster decisions, more resilient teams and stronger stakeholder confidence.

3. Why AI goes wrong when HI is an afterthought

Most AI disappointments follow a predictable pattern:

• Start with Integration

The organisation buys a powerful AI platform. Attention goes to implementation plans, technical integration and vendor timelines. It looks like a classic technology roll-out.

• Hit a Capability wall

Once the roll-out starts, it becomes clear that people do not yet have the skills or confidence to use the technology well. Training programmes are launched, often late and under pressure.

• Run into Cultural resistance

Even with training, adoption stalls. The culture is not set up for continuous learning, experimentation or constructive challenge. People are reluctant to admit what they do not understand and worry about what AI means for their roles.

• Confront a Trust deficit

As resistance surfaces, leaders realise there is limited trust in the motives behind the transformation. Many employees feel AI is something being done to them, rather than with them. Rumours, fear and scepticism flourish.

By this point, Integration, Capability, Culture and Trust have all become barriers to progress instead of enablers. Leaders often respond by pushing harder on the technology, tightening deadlines or reshuffling projects, which further erodes confidence.

The good news: if you change the sequence, the same elements can become powerful accelerators.

4. The HI–AI sequence that works

Our work and the research behind the white paper point to a simple but powerful insight:

The order in which you build HI–AI capability matters as much as the technology you choose.

We frame this as four pillars, designed to be built in sequence:

Mutuality – establishing shared purpose and trust so that AI is seen as something done with people and stakeholders, not to them.

Culture – creating an environment where learning, experimentation and honest challenge are safe and expected.

Capability – building the skills, judgement and confidence people need to use AI well, ask better questions of it and stay accountable for outcomes.

Integration – embedding AI into strategy, governance and day-to-day workflows so that it supports real decisions and value creation.

When you start with mutuality and culture, capability building sticks. When capability is in place, integration becomes faster, safer and more impactful. Reversing the order (starting with integration and hoping the rest will follow) is what produces many of today’s stalled or superficial AI programmes.

5. Two contrasting futures

You can think of HI–AI integration as two possible trajectories over the next five years.

• In the technology-first trajectory, AI capability accelerates rapidly while human understanding, trust and skills lag behind. Investments mount, results are patchy, and scepticism grows. The organisation captures only a slice of the potential value while accumulating reputational and ethical risk.

• In the HI-aligned trajectory, you invest first in mutuality and culture, then in capability, and finally in scaled integration. Humans and AI progress in tandem. AI becomes a trusted partner in decision-making, not a black box. Value compounds over time as lessons are captured and shared.

Both scenarios involve investment. The difference is whether you are paying “school fees” reactively through failed projects and lost trust, or deliberately, by building the human foundations first so that each AI step has somewhere solid to land.

6. What this means for executive leadership

For executive teams, the central question is shifting from “What AI tools should we buy?” to:

“How do we design a journey where our people and our AI systems get better together?”

That journey starts with a few clear leadership commitments:

• We will treat AI as a human-centred transformation, not just a technology upgrade.

• We will invest in trust, culture and skills before we scale the tools.

• We will measure success not only in efficiency, but also in resilience, learning and stakeholder trust.

From here, practical next steps typically include:

• A rapid diagnostic across the four pillars – understanding where your organisation stands today on Mutuality, Culture, Capability and Integration.

• Focused conversations with key stakeholders – unions, frontline staff, customers, regulators – to surface concerns, hopes and ideas about AI.

• A sequenced roadmap that deliberately stages AI investments so that each pillar supports the next, rather than scrambling to retrofit people into technology decisions already made.

7. About the white paper behind this introduction

This introduction is a narrative doorway into the ideas in the white paper The Fifth Revolution: Empowering Human Intelligence in the AI Era – Aligning Human Intelligence with Artificial Intelligence Integration.

The white paper provides the deeper foundation for the four-pillar model, including:

• Case studies of successful and unsuccessful HI–AI integrations

• Research on productivity, skills and organisational trust

• Practical tools for assessing readiness and designing interventions at each stage

Used together, this introduction and the white paper are designed to support executive teams who want to turn AI from a source of anxiety and mixed results into a disciplined, human-centred engine of performance, resilience and trust.

If you would like to explore how this applies in your organisation, the next step is a conversation. The question is not whether AI will reshape your operating environment; it is whether your Human Intelligence will be prepared to shape AI in return.